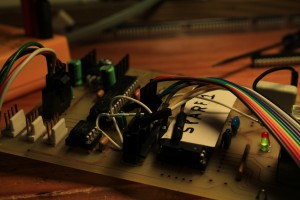

I've been designing a very simple new circuit to control the servos in the forthcoming new neck.

Each joint calls for a two channel servo driver, and I decided to add current sensing capability to this. By continuously monitoring the current drawn by the motor, the circuit should be able to detect the increase in current that occurs when the motor is stalled (because the neck has hit an obstruction or the mechanism has jammed) and shut down the servo drive before too much damage is done.
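To make the stall detection idea concrete, here is a minimal sketch of the shutdown decision in Python. The threshold of 4A and the requirement of three consecutive high readings are hypothetical values chosen for illustration, as is the function name `detect_stall`; the real figures would depend on the servo specifications and on how quickly the firmware samples.

```python
def detect_stall(samples_amps, stall_threshold_amps=4.0, required_consecutive=3):
    """Return the index of the sample at which the drive should be shut down,
    or None if no stall is detected.

    stall_threshold_amps and required_consecutive are illustrative
    assumptions, not values taken from the circuit design.
    """
    consecutive = 0
    for index, current in enumerate(samples_amps):
        if current >= stall_threshold_amps:
            consecutive += 1
            # Only trip after several high readings in a row, so that a
            # brief start-up surge does not shut the servo down.
            if consecutive >= required_consecutive:
                return index
        else:
            consecutive = 0
    return None


# Example readings in amps: one brief spike, then a sustained stall.
readings = [0.8, 1.1, 4.5, 1.0, 4.2, 4.6, 4.8, 4.9]
shutdown_index = detect_stall(readings)
```

Requiring several consecutive readings above the threshold is one simple way to ignore the short current surge a motor draws when it starts moving.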

The circuit is split into three parts: first, a current sensing resistor; second, a low-pass filter; third, an amplifier. The output from this is then fed into a standard CAN (Controller Area Network) circuit driven by a PIC chip.

The principle of measuring current with a current sense resistor is very simple: you put a low-value resistor in series with the power supply, and measure the voltage across it. The resistor can be placed either at the power supply's positive terminal ("high-side"), or negative terminal ("low-side"). Using the low side is slightly simpler as one end of the resistor is grounded. It has some disadvantages (the ground voltage level for the circuit being sensed is raised by the voltage across the resistor, and it cannot detect a load that is shorted to ground), but those aren't a problem for this application.

The current flowing through the motor passes through the current sense resistor, developing a small voltage of V = IR. I have estimated the maximum servo current to be 5A (this will be confirmed when I get the actual servo specifications). With a resistance of 0.05 ohms, this will give a voltage range of 0 – 0.25V for a current of 0 – 5A.

The power rating of the resistor is important here. Power is calculated as P = I²R, so at 5A the resistor would dissipate 5 × 5 × 0.05 = 1.25W. This is the minimum power rating for the current sense resistor.
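The sense voltage and power dissipation figures can be checked with a few lines of Python. This is just the V = IR and P = I²R arithmetic from the text, using the 0.05 ohm resistor and the estimated 5A maximum; the function names are my own.

```python
SENSE_RESISTANCE_OHMS = 0.05   # current sense resistor value from the text
MAX_CURRENT_AMPS = 5.0         # estimated maximum servo current (to be confirmed)


def sense_voltage(current_amps, resistance_ohms=SENSE_RESISTANCE_OHMS):
    """Voltage developed across the sense resistor: V = I * R."""
    return current_amps * resistance_ohms


def sense_power(current_amps, resistance_ohms=SENSE_RESISTANCE_OHMS):
    """Power dissipated in the sense resistor: P = I^2 * R."""
    return current_amps ** 2 * resistance_ohms


max_voltage = sense_voltage(MAX_CURRENT_AMPS)   # about 0.25 V
max_power = sense_power(MAX_CURRENT_AMPS)       # about 1.25 W
```

If the real servo turns out to draw more than the estimate, re-running this with the new current shows straight away whether the resistor rating still holds.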

Before being amplified, the signal passes through a low-pass RC filter with a cut-off of roughly 7Hz. This is simply a 220 ohm resistor and a 100µF capacitor arranged so as to block any high frequency noise that may be present on the sensor, for example voltage spikes caused by the servo motor's operation. The motor will only need to be sampled a few times per second, so I chose 7Hz as the cut-off frequency for the filter to reduce aliasing effects when sampling. Aliasing occurs when a signal is sampled at less than twice its highest frequency component; the high frequencies then appear as if they were low frequency changes, giving you an erroneous result.
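The cut-off frequency follows from the standard first-order RC formula f = 1 / (2πRC). The sketch below works it out for the 220 ohm and 100uF values, and also computes how much a given frequency is attenuated by an ideal first-order filter; the function names are my own.

```python
import math

FILTER_RESISTANCE_OHMS = 220.0
FILTER_CAPACITANCE_FARADS = 100e-6


def rc_cutoff_hz(resistance_ohms, capacitance_farads):
    """-3 dB cut-off frequency of a first-order RC low-pass filter."""
    return 1.0 / (2.0 * math.pi * resistance_ohms * capacitance_farads)


def rc_gain(frequency_hz, cutoff_hz):
    """Magnitude response of an ideal first-order low-pass at a frequency."""
    return 1.0 / math.sqrt(1.0 + (frequency_hz / cutoff_hz) ** 2)


cutoff = rc_cutoff_hz(FILTER_RESISTANCE_OHMS, FILTER_CAPACITANCE_FARADS)
gain_at_cutoff = rc_gain(cutoff, cutoff)     # 1/sqrt(2), i.e. -3 dB
gain_at_1khz = rc_gain(1000.0, cutoff)       # high frequency noise is strongly attenuated
```

The cut-off works out at a little over 7Hz. Note that a single RC stage rolls off gently (20 dB per decade), so frequencies just above the cut-off are reduced rather than removed.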

The next stage is a non-inverting amplifier. An amplifier is necessary here because the chip's analogue to digital converter has limited resolution, so amplifying the signal from 0 – 0.25V into the range 0 – 2.5V allows me to use more of its range, giving a finer-grained current measurement.
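The benefit of amplifying before conversion can be shown with a rough calculation. The sketch below assumes a 10-bit converter with a 5V reference; both are assumptions for illustration, since the actual PIC part and reference voltage are not specified here. It converts a raw reading back into amps and compares the current represented by one ADC step with and without the ×10 gain.

```python
ADC_BITS = 10                  # assumption: 10-bit converter
ADC_REFERENCE_VOLTS = 5.0      # assumption: 5 V reference
AMPLIFIER_GAIN = 10.0          # 0 - 0.25 V amplified to 0 - 2.5 V
SENSE_RESISTANCE_OHMS = 0.05

ADC_FULL_SCALE = (1 << ADC_BITS) - 1   # 1023 for a 10-bit converter


def adc_counts_to_amps(counts, gain=AMPLIFIER_GAIN):
    """Convert a raw ADC reading back into motor current in amps."""
    adc_volts = counts / ADC_FULL_SCALE * ADC_REFERENCE_VOLTS
    sense_volts = adc_volts / gain          # undo the amplifier
    return sense_volts / SENSE_RESISTANCE_OHMS   # I = V / R


def amps_per_count(gain):
    """Smallest current change that one ADC step can represent."""
    return adc_counts_to_amps(1, gain)


step_without_amp = amps_per_count(1.0)            # roughly 0.098 A per count
step_with_amp = amps_per_count(AMPLIFIER_GAIN)    # roughly 0.0098 A per count
```

Under these assumptions, each ADC step represents about a tenth of an amp without the amplifier and about a hundredth of an amp with it.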

Because the amplifier output needs to operate down to zero volts, I chose the LM358 dual operational amplifier. This 8-pin IC contains two amplifiers with an output swing of 0V to Vcc - 1.5V. Most op-amps cannot drive their output all the way to the power rails, so an application like this would normally call for a "rail to rail" amplifier. In this instance, however, the output only needs to reach the 0V rail, so the LM358 will do the job just fine.

The two feedback resistors are arranged to give me an amplifier gain of 10.
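For a non-inverting amplifier the gain is 1 + Rf/Rg, where Rf is the feedback resistor and Rg is the resistor from the inverting input to ground. The resistor values in this sketch (9k and 1k) are hypothetical, picked only because they give a gain of exactly 10; they are not taken from the schematic.

```python
def non_inverting_gain(feedback_ohms, ground_ohms):
    """Closed-loop gain of an ideal non-inverting op-amp stage: 1 + Rf / Rg."""
    return 1.0 + feedback_ohms / ground_ohms


# Hypothetical resistor pair giving the gain of 10 described in the text.
FEEDBACK_OHMS = 9_000.0
GROUND_OHMS = 1_000.0

gain = non_inverting_gain(FEEDBACK_OHMS, GROUND_OHMS)
amplified_full_scale = 0.25 * gain   # 0.25 V at 5 A becomes 2.5 V
```

Any pair of resistors in a 9:1 ratio gives the same gain, so the choice in practice comes down to available values and tolerance.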

Finally, the signal passes through a 20 ohm resistor before being fed into the PIC chip's analogue to digital converter. The 20 ohm resistor is recommended to ensure the amplifier remains stable when driving a capacitive load.